-

Capability

I love the take from Valve engineers on their philosophy for the Steam devices:

We don’t block anyone from doing what they want to with their device. […] This is your device, your computer, you own it, you can mod it and extend it in any way.

Compare that to Apple’s stewardship where they decide what you can do with your devices. I’d love to be able to do more and experiment more, even if the experience would be far from polished and I might go back to a more regular setup. Wouldn’t it be exciting to:

- Run iPadOS on my iPhone with multi-tasking and driving an external display – like this post on reddit (via Craig Grannell)

- Run a desktop-class operating system on Vision Pro. The hardware is capable of it. It’s so strange that connecting even a lesser spec’ed Mac lets you do more.

- Create your own Apple Watch faces. I created a custom one for my wife’s Garmin watch as a little present, and it’s just lovely to be able to do that.

- Turn my AppleTV box into a tiny home server.

Apple makes incredibly capable hardware but what you can do with it is limited by Apple’s software: more “cap” than “ability”.

-

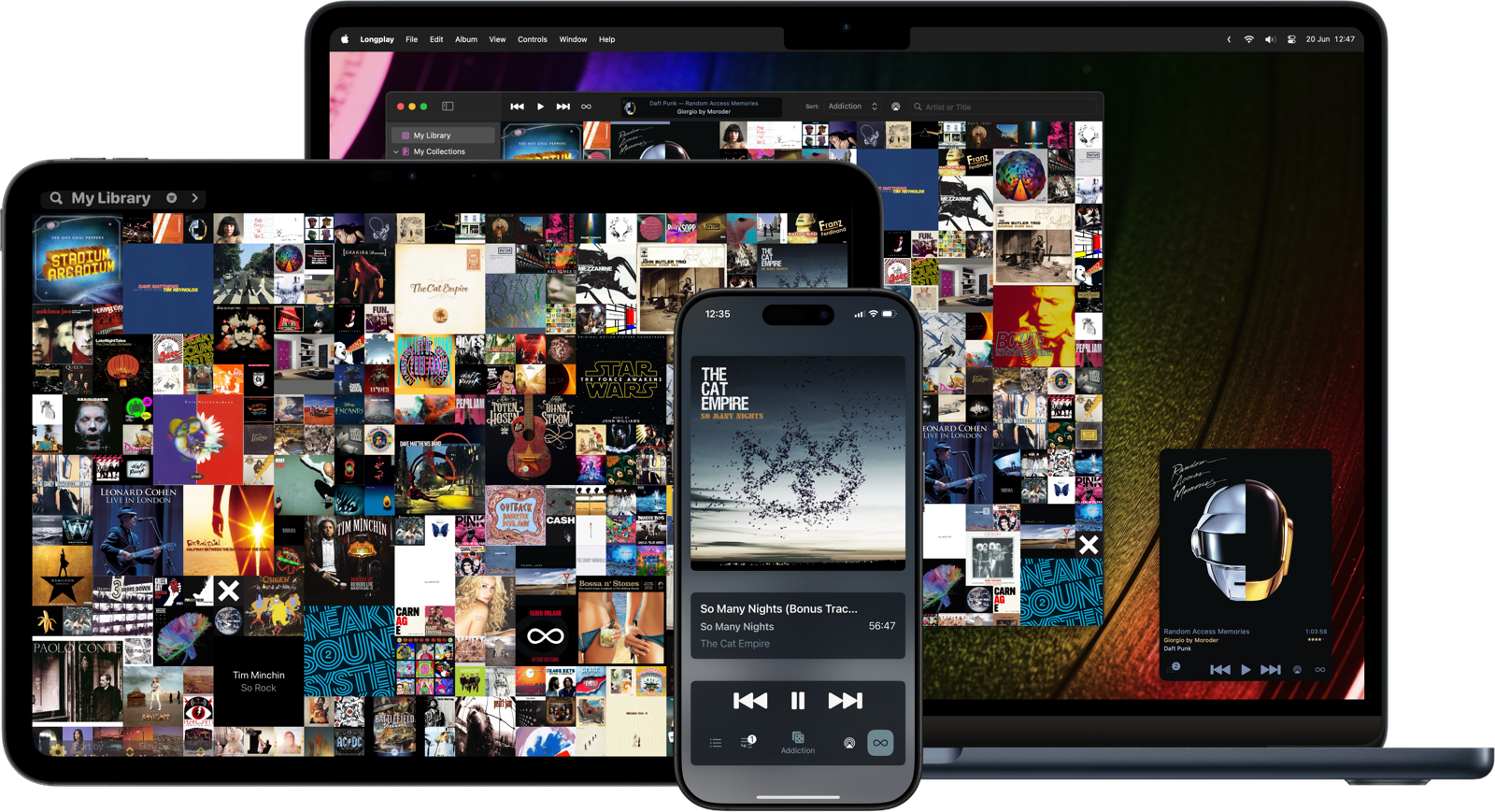

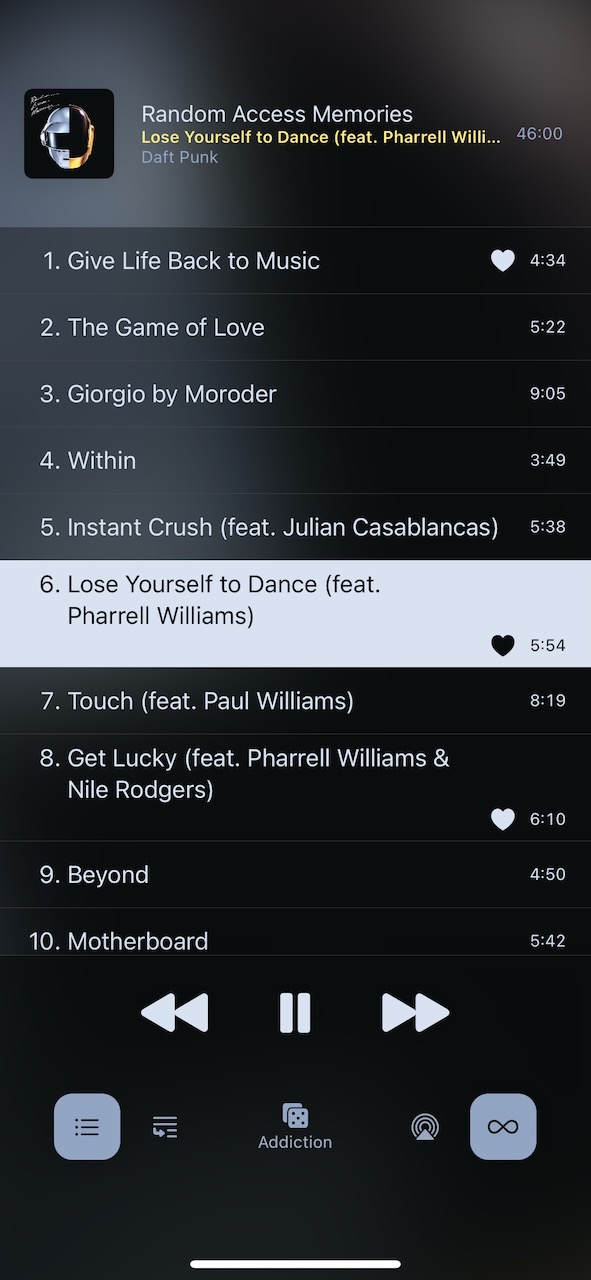

Longplay comes to the Mac

I am thrilled to be finally releasing Longplay for Mac today. Longplay is all about the joy of listening to entire albums and marvelling at their beautiful album artwork. It is built from the ground up for the Mac, with the familiar pretty album wall, a dedicated mini player, all the main features from iOS, plus Mac exclusives like AppleScript and a nifty MCP server. I love how it turned out. For all the details, check out longplay.rocks, where you can download the app to try yourself (you need to unlock it to listen, though), or find it on the Mac App Store.

Read on for a bit of my developer’s commentary of this release.

What’s Longplay in a nutshell

If you can “hear” the start of the next track as the current one finishes and identify albums by their artwork, then Longplay is for you.

It filters out the albums where you only have a handful of tracks, and focusses on those complete or nearly complete albums in your library instead. It analyses your album stats to help you rediscover forgotten favourites and explore your library in different ways. You can organise your albums into collections, including smart ones. And you can go deep with automation support. Speaking of automation, there are two to touch on in more detail…

AppleScript in 2025? Didn’t you get the AI memo?

The Mac is great for providing quick access to anything, and I am a frequent user of any “quick open” capabilities to get to something specific with just a few keystrokes. I wanted that capability for Longplay, though I didn’t want yet another quick open flow that’s slightly different from those of other apps. Then it occurred to me that I use Alfred for exactly that reason, and what I really wanted was an Alfred workflow to find and play albums in Longplay quickly.

That sent me down the rabbit hole of AppleScript. I hadn’t added support for AppleScript to an app before, so I had some catching up to do. The issue with that is that it’s not a priority for Apple, so there’s no up-to-date information, let alone a WWDC code-along, for how to add that to a SwiftUI or AppKit app. Whenever you as a developer end up sifting through the Documentation Archive, get the archival header on Ray Wenderlich tutorials, and the up-to-date tutorials are written in Objective-C, you know you went off the beaten path.

Digging through those and with the tremendous help of Daniel Jalkut and Michael Tsai on the AppKit Abusers slack, I got it working, added AppleScript support, and created my Alfred workflow.

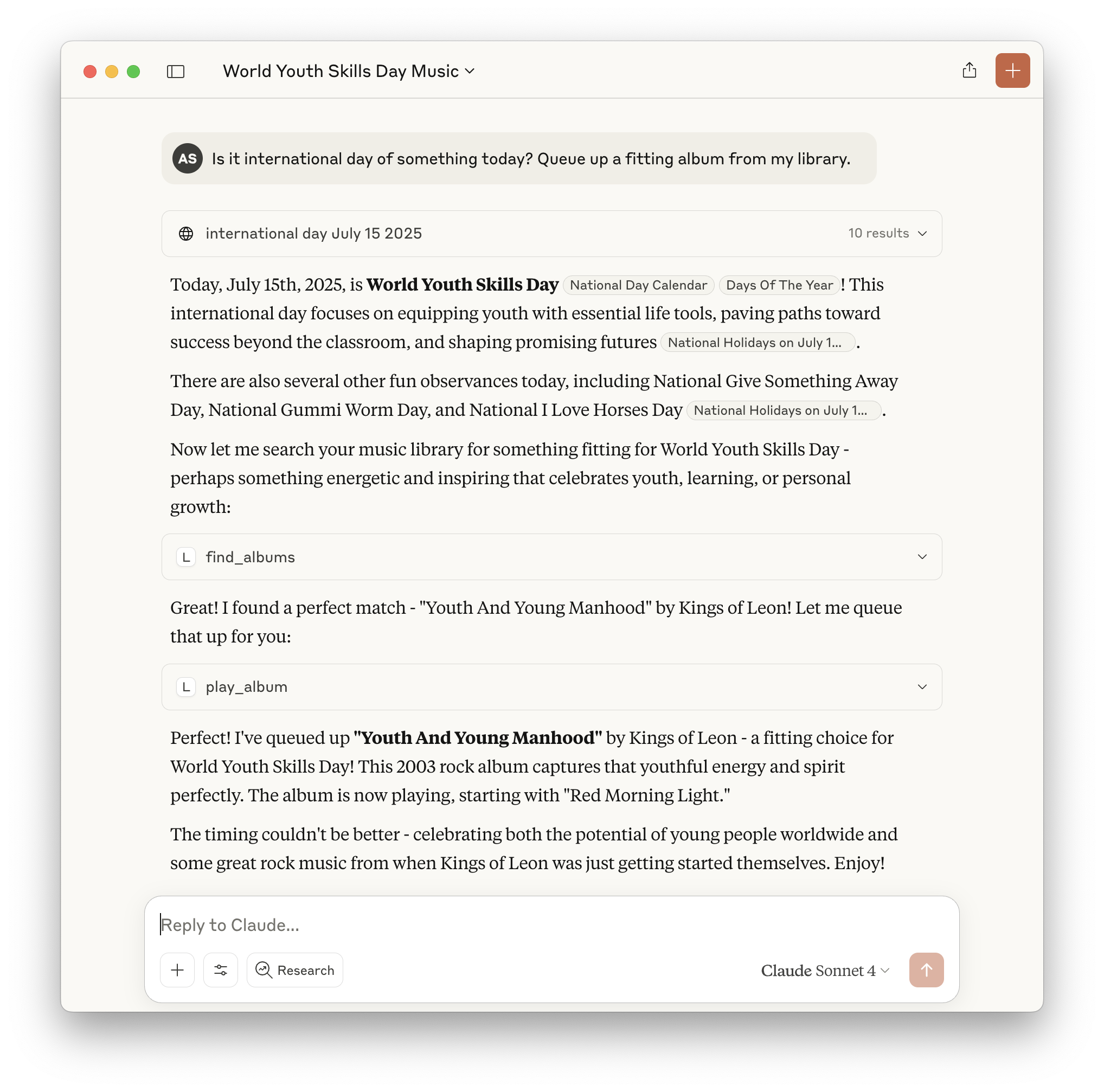

MCP! See, I got the memo.

When Apple initially pitched Apple Intelligence last year, I liked the combination of an AI assistant that can interact with your personal data and capabilities of your apps, combining it with the world knowledge and general understanding of language that LLMs bring. A smarter Siri is taking a while longer, but the industry is charging ahead and the capabilities are already here.

There’s quite some talk about MCP lately and it hits a sweet spot: It gives the hallucination-prone AI assistants of today access to tools and personal context that’s kept in apps and services. I experimented with that for Longplay, and it turned out to work well, so it’s in the released version of Longplay for Mac. Enable the MCP server in the settings, configure Claude Desktop, and Claude can then access Longplay’s data and perform actions like queueing up albums, adding albums to collections, or creating smart collections.

Some examples:

- “Is it international day of something today? Queue up a fitting album from my library.”

- “Look at my recently played album, describe a theme for it, and queue up another album that fits the theme.”

- “Look at albums that aren’t in any collections yet, and suggest collections for them. Bounce them past me first, before adding them.”

- “Create a smart collection of 90s rock albums”

- “What are my three favourite and/or most played albums by Radiohead? How do the other albums compare? Take a guess why I prefer them.”

- “Play that album by John whatshisname that I really like.”

This all works remarkably well. It’s cool stuff, and I hope to see it in more apps going forward.
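For a rough idea of what happens under the hood: MCP is built on JSON-RPC 2.0, and a client such as Claude Desktop invokes a server’s tool with a `tools/call` request. The sketch below only builds such a request in Python. The tool name `queue_album` and its `title` argument are made up for illustration; Longplay’s actual tool names and parameters may well differ.

```python
import json


def make_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request for MCP's tools/call method."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and argument, not taken from Longplay itself.
request = make_tool_call(1, "queue_album", {"title": "OK Computer"})
print(json.dumps(request, indent=2))
```

The assistant decides which tool to call and with which arguments; the app only has to describe its tools and answer these requests.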

Apple is clearly going the AppIntents route and that should help (and Longplay already has extended support for that, too), but alternative approaches like MCP still have a place, in particular for integrating between apps.

Why it took a while

The Mac journey for Longplay started all the way back in September 2021, just after version 1.2 was released for iOS. I tried the new feature of running an iOS app on macOS. I was delighted to see my albums loaded up well, but that quickly led to disappointment when clicking any album to start playback would launch the sluggish Music app, and playback all happened in there. I gave Catalyst a try, but that behaved the same way. So the decision was made: Let’s go all in with a dedicated Mac app.

Indie app development is a side gig for me and I have little kids, so things go slowly, and a good amount of under-the-hood untangling was needed, but by mid 2022 I had a working version ready for a public beta. The key limiting factor then was that it could only play DRM-free tracks in your library. As most of the music listening in Longplay for iOS was happening through DRM-protected Apple Music, I was not going to launch like that: I wanted feature parity, and many users probably expect it.

So I put the Mac version on the back burner and focussed on expanding the iOS version with the various ideas I’ve had, which came together in a big 2.0 update of August 2023.

Meanwhile, Apple announced in June 2023 that playback support for Apple Music tracks through MusicKit was coming to the Mac in macOS 14. I restarted work on the Mac app, and while it was working, I encountered pesky playback glitches, where Apple Music playback would often but not always start stuttering after a couple of tracks. I wouldn’t launch the app like that. However, the app already worked reliably for DRM-free tracks, which is how the “Early Access” version of Longplay for Mac was born, as I knew some people who’d only or primarily use that (including myself).

On the glitches, I tried various workarounds that I could think of, kept lots of notes on when it happened and when it didn’t, filed a TSI (which got rejected due to no known workarounds), filed comprehensive radars including a demo app to reproduce, and reached out to Apple contacts. I did get some responses and am thankful for the support, but was still blocked. macOS 14 launched with the same glitches later in 2023. End of 2024, macOS 15 launched with the same issue still present.

I kept chipping away at the Mac app in the meantime, hoping that those glitches would be resolved at some stage. I focussed on the technical details, adding polish, hitting SwiftUI dead ends and opting for AppKit in more places. Then, late in 2024, CoverSutra relaunched for the Mac and it didn’t have the playback issue. I was stunned. So I dug in again and finally came across a workaround that worked for Longplay: Updating the dock icon every second. I was fine with that, as the dock icon reflects the current album and progress anyway.

So I kicked into gear to polish up the Mac app and release it finally, and that’s how we get to today. Hooray.

Special thanks to the Early Access supporters for their patience, feedback, and motivation to keep me going. And also shoutout to Craig Grannell and Jason Snell for prodding me along the way.

(Good news on the Apple Music playback glitch is that it is fixed in macOS 26, coming later this year.)

What’s in the queue

Top features I’m working on currently are:

- Support for local files, without having to go through the Music app as the library management. It’s already in the Mac app, via a hidden setting, but I don’t want to launch it officially until it’s ready on iOS, too, and, maybe, until Last.fm / Listenbrainz can be an input to Longplay’s metrics.

- Optional sync of your current album and queue across devices.

I’m always keen to hear about how people are using Longplay and what they’d like to see next. Feel free to reach out via words@longplay.app, Mastodon, Bluesky or the support page.

Thanks for reading and your interest. Find out more about the app on longplay.rocks. The iOS / visionOS app was 50% off during launch week to celebrate the launch of the Mac app.

-

The process for installing macOS Tahoe is the same as last year, so I updated last year’s blog post.

-

Upgrading to a 2024 iPad Air from a 2018 iPad Pro

My 2018 12.9 iPad Pro has been one of my greatest tech purchases. I’ve used it nearly every day for the last 5.5 years, and it’s still in great shape. Why upgrade now? While I’m not an artist, I love my Apple Pencil for taking notes, sketching my thoughts, and drawing. When I tried the Apple Pencil Pro in a store the other day, I really liked the new squeeze feature and how it makes the tool palette much quicker to access. My old iPad Pro also never got support for pencil hover, so the combo made me keen to upgrade.

The new iPad Pro would have been the bigger overall upgrade, but the spec differences do not justify the much higher price for my usage. I do like using a keyboard occasionally and I have a Magic Keyboard, so shelling out another 500 (Aussie) bucks on top was just a big no, and going to no keyboard would have been a big downgrade. I was very happy when I learned that the new iPad Airs are compatible with my Magic Keyboard¹ (and various covers), so I took the plunge.

Here are my impressions after a few days:

- The purple is subtle and more lavender than purple, but better a little splash of colour than none.

- The initial set-up was the worst set-up experience I’ve ever had with an Apple device. My home WiFi was slow at the time, which caused the set-up to fail multiple times early on. The upgrade to iOS 17.5.1 stopped near the end the first time around and I had to figure out how to abort it. When it finally worked the second time around, I tried to migrate my iPad Pro over to it, and that failed near the end with no other option than to reset and start all over. In total it took me a good 4 hours from unboxing to seeing the home screen of my new iPad – which I then had to set up from scratch.

- Touch ID is effectively an upgrade for me as I often use my iPad with a pencil and it lying (nearly) flat down. Face ID never worked then, so I trained myself to do a chicken head movement and tilt the iPad up whenever I needed to unlock it. No more! Now I just need to un-train that head movement…

- ProMotion makes little difference to my aging eyes. I notice it a wee bit in a side-by-side comparison, but don’t miss it in use.

- The M2 is a noticeable update. The 2018 A12X had a lot of longevity but everything feels a lot smoother. It’s also nice to have access to M1+-only features such as the “more space” screen option and hooking it up to an external display.²

- The landscape camera is great as I often use my iPad for work video calls. (Keeping my Mac free of Zoom and Teams.)

- I wish it still had a SIM card slot, not just eSIMs. Australia’s a bit behind on those.

- I’m looking forward to trying out the Apple Intelligence features on this whenever those land.

- I love the Apple Pencil Pro!

-

Curiously enough, when I tried out the device at an Apple Store, I asked an employee and they claimed otherwise, and were oddly assertive about it when I pointed out that the keyboard on display looked and felt exactly like the one I had at home for my iPad Pro. ↩

-

Are there any others? Centre State maybe? I couldn’t find an up-to-date overview and, no, Apple, I’ll not waste the time going through those footnotes in this PDF. ↩

-

Apple's Intelligence

Apple Intelligence is a compelling privacy-first take on generative AI. Unfortunately, it’s not accessible to third-party developers. As Manton Reece points out on Core Intuition #603:

It appears that the super low-hanging fruit that I thought we had, which is that there would be APIs for developers to use these on-device models, let alone the cloud models, it appears that’s not true, at least not yet. It doesn’t appear there’s a way for me to just use the on-device model. It seems like a lot of Apple Intelligence is for Apple.

Daniel Jalkut then quickly coins this as Apple’s Intelligence. I also hope that Apple just didn’t get to it yet, as it would be fantastic for developers to have access to these privacy-friendly, local capabilities.

Generative AI enables many great use cases:

- Summarisation and extensive search across your notes app of choice; or have it transcribe and summarise voice recordings as a note.

- Have a share extension that extracts data from a website that’s relevant to your app, like Sequel’s Magic Lookup, or to import recipes into your recipe app.

- When an app lets you create groups or collections, the app could suggest titles, descriptions, or a symbol, emoji, or create a mini image for it.

- Provide much smarter in-app help by having an LLM digest the app’s user guide and letting you ask it questions or search it in a more free-form way.

Apps currently need to either ship their own models or send data off device to external APIs which comes with privacy concerns, risks, and costs.

Apple did introduce new machine learning tools to compress your own models and optimise them for Apple Silicon, but that’s complex and lower level than most developers would be comfortable with. Also, it still means bloating up your app with several GBs of data.

Let us use those new foundation models directly. Let us write these adapters that sit on top of a model to optimise them for app-specific use cases. Give us APIs for them and the private cloud compute; or a smart wrapper around it that then decides which to use. Let us make those neural engines sweat and bring our apps under the Apple Intelligence umbrella.

-

Installing a macOS Beta in a Separate APFS Volume

It’s beta season for Apple developers, but it’s not a wise idea to install those betas on your main machines. Dual boot is the way. This way, you can easily switch back to your stable version of macOS if needed. I did not find an up-to-date guide for installing a macOS beta in a separate APFS volume on your Mac, so here is what worked for me.

Update 12 June 2025: The same instructions also work for installing macOS Tahoe. Slightly updated the wording.

Word of caution: Duncan Babbage points out: “An operating system that changes the structure of the file system isn’t constrained by the boundaries of an APFS container.” While I personally haven’t had issues with my main non-beta macOS install after installing Sequoia in 2024 as below, nor with installing Tahoe in 2025, please be mindful of this.

Here’s how to do it on an Apple Silicon Mac:

1. Back up using Time Machine

2. Create a new APFS volume

3. Shut down the Mac

4. Start up and keep holding down the power button

5. Select “Options”

6. Then choose to reinstall your current macOS version onto the volume from step 2.

7. Wait a while (it said 5h for me, but took <1h)

8. When it’s installed, probably best to not log into iCloud (though I did, and then disabled all the various sync options) and skip migrating your previous user account

9. Then open System Settings, enable beta updates, and update that install to your beta of choice.

10. File feedback to Apple!
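The “Create a new APFS volume” step is easy to do in Disk Utility, but if you prefer the command line, `diskutil apfs addVolume` does the same thing. Here is a minimal sketch that only assembles and prints the command rather than running it. The container identifier `disk3` and the volume name `macOS Beta` are placeholders; check `diskutil list` for the APFS container on your own Mac.

```python
import shlex


def add_volume_command(container, name):
    """Return the diskutil arguments that add an APFS volume to a container."""
    return ["diskutil", "apfs", "addVolume", container, "APFS", name]


# "disk3" and "macOS Beta" are placeholders, not values from this guide.
cmd = add_volume_command("disk3", "macOS Beta")

# Print the command for review; paste it into Terminal once it looks right.
print(shlex.join(cmd))
```

Printing first is deliberate: it gives you a chance to double-check the container before anything touches your disk.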

-

Introducing Longplay 2.0

I’m excited to launch a big 2.0 update to my album-focussed music player, Longplay. If you’re interested in the app itself and what’s new, head over to longplay.rocks or the App Store. In this post I want to share some of the story behind the update.

The idea for 2.0

Longplay 1.0 was released in August 2020. I had used the app for years before that myself, but I didn’t know how it would be received by a wider audience. I loved the kind of feedback that I got, which helped me distill the heart of the app: Music means a lot to people, and Longplay helps them reconnect with their music library in a way that reminds them of their old vinyl or CD collections. It’s a wall of their favourite albums that has been with them for many years or decades. It’s something personal. The UI very much focussed on that part of the experience, and I wanted to keep that spirit alive, keep the app fun, while adding features that people and I found missing.

The main idea behind 2.0 was to focus on the playing of music beyond a single album. 1.0 just stopped playback when you finished an album, but I wanted to stay in the flow – to either play an appropriate random next album or the next from a manually specified queue.

The new features

Collections (Video)

With that in mind, I focused on the following features:

- Collections: Create collections of albums and playlists, which is a great way to group albums by mood or language, and to tuck those sleep or children albums somewhere.

- Album Shuffle: Automatically continue playing a random album, either by the selected sort order or by your selected collection.

- Queue: Long-tap or drag albums to the queue to continue playing those, if you don’t want to shuffle.

And while building this, a few things led to other things:

In order to control the playback queue, I switched from the system player to an application-specific player. That meant I also needed an in-app Now Playing view. This also meant that playback counts and ratings were no longer synced with the system, so I added a way to track those internally. That and the collections meant it was time to add sync using iCloud, and so that the playback counts don’t just stay in the app, you can connect your Last.fm or ListenBrainz account.

Under-the-hood a lot changed, too, which isn’t visible to the user, but will make it easier to support more platforms – see further below. It let me add CarPlay support to this update, and, as a tester put it:

Love how Longplay keeps getting better - the new CarPlay ability is so wonderful.

Track list

Colourful Now Playing

Polishing the UI

Longplay is a labour of love, and I enjoy polishing up the UI. This meant a lot of iterations (especially on iPad where I was going back and forth), and I particularly want to call out the constructive and great feedback I got from Apple designers during a WWDC Design lab, and the feedback from my beta testers. Special thanks here to Adrian Nier, who has provided lots of detailed feedback and some great suggestions.

A particularly fun feature to build was adding a shuffle button to the Now Playing view. This is a destructive action, so I wanted to make it harder to trigger than just a button press. I ended up with a button that you have to hold down for it to start shuffling through the albums akin to a slot machine, and it then plays the album where you let go. It comes with visual and haptic feedback, and if it whizzed past an album that you wanted to play, you can also drag left to go back manually. In the words of Matt Barrowclift:

That “shuffle albums” feature is insanely fun, it’s practically a fidget toy in the best possible way. Longplay 2.0’s the only player I’m aware of that makes the act of shuffling fun.

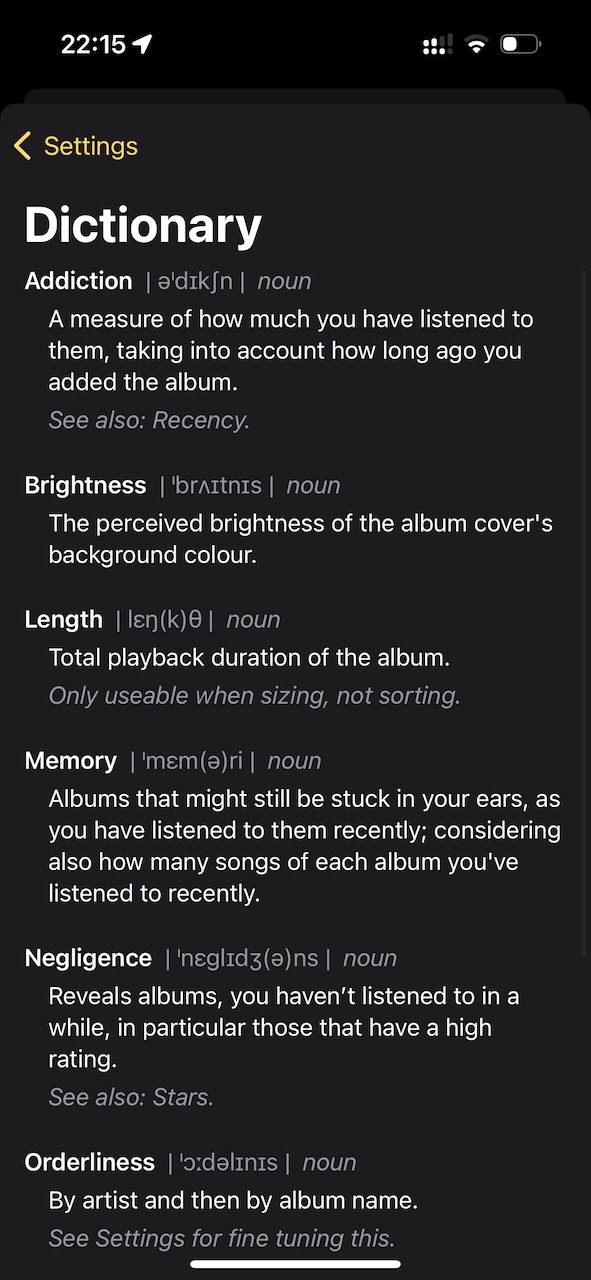

Shuffling (Video)

Little dictionary of sort orders

Pricing model

Initially I was aiming for a paid upgrade, but when I compared my past sales to the effort, I decided to use that time for other things. So the app stays paid upfront for now, making 2.0 a free upgrade, but I bumped the price a bit. I’ll likely revisit this down the track, though, as it’d be nice for potential users to have a way of trying (parts of) the app.

What’s next

I’m glad this update finally makes it out, as it’s been a long time in the making.

Look out for another update coming soon for iOS 17, making the home screen widgets interactive.

I love hearing feedback about the app and also suggestions for features, so please get in touch with me or post on the feedback site. As for bringing the app to more platforms, macOS is in the works, seeing a life-size album wall in Vision Pro is pretty amazing, and people are asking for AppleTV support.

Get the app/update on the App Store.

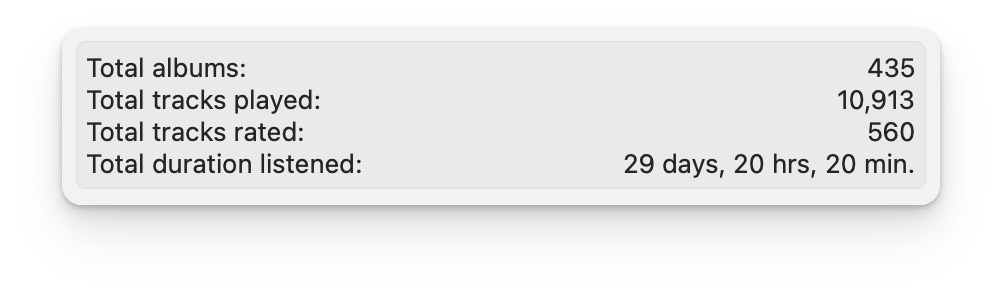

Developing this app is incredibly fun, as it’s something I use myself almost every day. Here are my playback stats since I added the internal playback tracking middle of 2022:

Thoroughly tested

-

Insights from Microsoft Build for app developers

It’s conference season for developers and Microsoft’s annual conference Build just wrapped up. I haven’t paid particular attention to Build in the past, but it’s been interesting to follow this year due to Microsoft’s close collaboration with OpenAI and them pushing ahead with integrating generative AI across the board. My primary interest is in what tools they provide to developers and what overarching paradigms they – and OpenAI – are pushing.

The key phrase was Copilots, which can be summarised as “AI assistants” that help you with complex cognitive tasks, and specifically do not do these tasks fully by themselves. Microsoft repeatedly pointed out the various limitations of Large Language Models (LLMs) and how to work within these limitations when building applications. Microsoft presented a suite of tools under the Azure AI umbrella to help developers build these applications, leverage the power of OpenAI’s models (as well as other models), and do so in a manner where your data stays private and secure, and is not used to train other models.

I’ve captured my full and raw notes separately, but here’s a summary of the key points I took away from the conference:

- LLMs have their limits, but crucially aren’t aware of them, and users cannot be expected to be aware of this either. This requires a different approach to building applications, as out-of-the-box LLMs will appear more powerful than they are. As a developer you’ll need to add guardrails to your application to keep the model on task, complement it with your own data, and provide a way for users to understand the limitations of the model and make it easy for them to spot mistakes and correct them.

- Several best practices are emerging for working with LLMs, in particular meta prompts and how to write those in a way to encourage the model to create accurate responses. This is a very iterative process, and you’ll want to test and evaluate your prompts and responses. Microsoft has a service called Prompt Flow that helps with this process.

- Grounding the model on your data helps to minimise hallucination/confabulation/bullshitting. Fine-tuning the model is a powerful option, though it’s fairly elaborate and you can’t tell from the generated output how much was based on your fine-tuned data or the base model. Instead you can inject your data as context into the prompt, i.e., using the Retrieval Augmented Generation (RAG) approach. Azure will provide services for indexing your data both traditionally with keywords and as vector embeddings, and then automatically injecting them into the prompt.

- Plugins are a powerful way to add dynamic information to your prompts, and to take action on systems. This is a very powerful approach, and I’m curious to see what the best practices will be here.

- When integrating chat capabilities in your app you’ll want to consider content filtering to make sure the bot stays on topic and stays clear of topics of harmful, violent, or sexual nature. Microsoft has a Content Safety service that can be used to filter out undesirable content, across various categories and intensity levels. Generated content will be automatically filtered.
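To make the grounding point above a bit more concrete, here is a minimal sketch of the RAG idea in Python: find the snippet most similar to the question, then inject it into the prompt as context. It makes big assumptions. Plain word counts stand in for real vector embeddings, the two documents are invented sample data, and the function names are my own rather than anything from Azure or OpenAI.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: lower-cased word counts, standing in for a real model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Inject the retrieved documents into the prompt as grounding context."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Invented sample data, purely for illustration.
docs = [
    "Refunds are processed within 14 days of the request.",
    "Our office is closed on public holidays.",
]
prompt = build_prompt("How long do refunds take?", docs)
print(prompt)
```

A real setup would swap `embed` for an embedding model and `retrieve` for a search index, but the shape stays the same: retrieve first, then let the model answer from what was retrieved.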

The talk by OpenAI’s Andrej Karpathy is well worth a watch as it provides a good high level discussion of the process and data and effort that went into GPT-4, strengths and weaknesses of LLMs, and how to best work within those constraints. The talk by Microsoft’s Kevin Scott and OpenAI’s Greg Brockman is also worth a watch as it provides a good overview of the tools and services that Microsoft is providing to developers, and how to best use them; including some insightful demos.

The main tools that Microsoft announced (though most are still in “Preview” stage and not publicly available):

- Azure OpenAI Service provides broad access to OpenAI’s models, but also lets you use your own models. It’s a managed service that takes care of the infrastructure and scaling, provides a simple API to access the models, and provides various add-on services on top so that you can focus more on your own data and your own application.

- AI Studio can best be described as a user-friendly web interface to build and configure your own chatbot. Tell it what data to use, configure and evaluate the meta prompt, set content filters, test and deploy as a web app.

- Prompt Flow is a web tool to help you evaluate and improve your meta prompts, compare the performance of different variations, do bulk testing of prompts, and evaluate the prompts on various metrics.

- Azure Cognitive Search is an indexing and search service for your data. Previously it worked on keyword based search, and is gaining support for “vector” search, which means that you can find matching documents by meaning or similarity, even if there’s no match on a keyword basis.

Microsoft is leading the charge and benefiting from their partnership with OpenAI, and their long-term investments into Azure are clearly paying off. Their focus on keeping data safe and trusted, without having it used to train further models or to improve their services, is a key benefit for commercial users. I look forward to getting access to these tools and trying them out.

From an app developer’s perspective nothing noteworthy stood out there to me, as the tools presented are all server-side and web-focussed. The APIs can of course be used from apps, but I can’t help but wonder what Apple’s take on this would look like – Apple seem preoccupied with their upcoming headset (to be announced in the next 24 hours), so their take on this might be due in 2024 instead. I’d be curious about what on-device processing and integrating user data in a privacy-preserving manner would enable.

-

♫ Happy birthday to me ♬, sings this blog. 11 years!

-

State of AI, May 2023

Discussions about dangers keep heating up:

Historian Yuval Noah Harari has big fears about the impact of LLMs, despite their technical limitations – or maybe because of those.

Godfather of AI and inventor of Deep Learning, Geoffrey Hinton, quits Google, so that he can openly speak his mind and voice his concerns about AI. In particular, The Conversation cites him as urging us to stop arguing about whether LLMs are AI, acknowledge that it’s some different kind of intelligence, stop comparing it to human intelligence, and focus on the real-world impacts that it’ll have in the near term: Job loss, misinformation, automatic weapons.

Meanwhile on Honestly with Bari Weiss, OpenAI CEO Sam Altman (read or listen) does not agree with the open letter asking his company to pause and let competitors catch up, but he does share the concerns about the downsides of LLMs and asks for regulation and safety structures led by the government, similar to nuclear technology or aviation. OpenAI tries to be mindful of AI security and alignment¹, but Altman worries that competitors trying to catch up are cutting corners – indirectly making an argument for OpenAI to pause after all, so that competitors can catch up without having to cut these corners. However, he also worries that efforts in other countries (China) ignore these questions. He also suggests that AI companies should not follow traditional structures, and that democratically elected leaders for them would make more sense.

Other good reads and listens on what’s happening lately:

- Hard Fork discussion on Drake: Loved the discussion on what AI generated music by fans could mean for artists. There are certain songs I love listening to on repeat and a few years ago there was this “infinite song” web app floating around that stitched a song into a semi-generative infinite loop. I’d love a version of that which is using generative AIs, and possibly mixing different songs together.

- MLC TestFlight runs an LLM locally on a modern iPhone. Remarkable that it works. While GPT4 is leaps and bounds ahead in terms of quality, those locally run models could catch up. LLMs likely follow an S-curve in terms of their quality. Makes me wonder if GPT4 is near the top or still near the start or middle.

- Ars Technica - Report describes Apple’s “organizational dysfunction” and “lack of ambition” in AI: A rather damning report of how Apple is falling behind other companies in exploring the leaps that AI is taking, and the new paradigms it opens up. LLM-based improvements on iOS are expected next year at the earliest, i.e., September 2024. I gave up on Siri a while ago.

- Is your website in an LLM: Great journalism here on digging into what makes up (some) LLMs. I was happy to see that a bunch of my blog got slurped up, as I hope it might help some developer encountering an obscure problem – though acknowledgement and source links would be appreciated.

- Surprising things happen when you put 25 AI agents together in an RPG town: Report on the fascinating emergent behaviours of running multiple LLM-agents. Hard Fork has a fun discussion of it, too. Really curious how LLMs will impact games.

-

AI alignment means making sure that, if AI exceeds human intelligence, its goals are aligned with the goals of humanity – and doesn’t treat us like ants. ↩